Hello Echo

Using reflected waves as an extended sense of touch

If we throw a ball against a wall, it bounces back. The same happens with waves when they encounter an obstacle.

This is why we hear echoes. When a wave is reflected, it changes: information about the obstacle is transferred to it and can be analysed.

Feeling Beneath the Surface

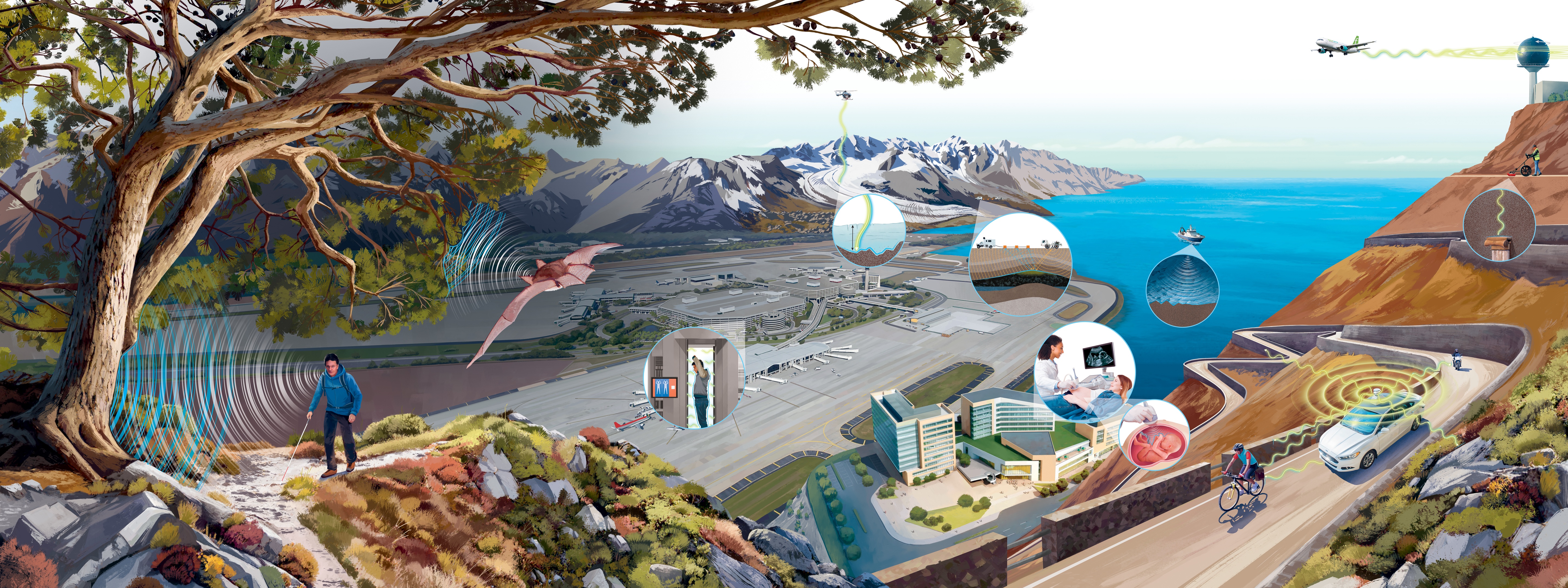

We cannot see into our bodies from the outside because we are not transparent. However, with suitable waves and their reflections, we can ‘feel’ inside. Medical professionals can send ultrasound waves into the body through the skin. These waves are reflected wherever one type of tissue meets another. We can detect a baby in the mother’s womb because its environment – the amniotic fluid – is liquid and the baby is not. Surveying the seafloor from a ship with sound waves (sonar) works the same way: the sound waves sent through the water are reflected by the solid seabed.
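Sonar turns an echo into a distance by timing it: the pulse travels down to the seabed and back, so the depth is half the total path. A minimal sketch, assuming the wave speed in the medium is known and constant; the function name `echo_distance` is hypothetical, and the figure of about 1500 m/s for seawater is an approximation that varies with temperature, salinity and depth:

```python
def echo_distance(round_trip_time_s: float, wave_speed_m_per_s: float) -> float:
    """Distance to a reflecting surface from an echo's round-trip time.

    The pulse covers the distance twice (there and back), so the
    one-way distance is d = v * t / 2.
    """
    if round_trip_time_s < 0 or wave_speed_m_per_s <= 0:
        raise ValueError("time must be non-negative and speed positive")
    return wave_speed_m_per_s * round_trip_time_s / 2


# Assumed approximate speed of sound in seawater, in metres per second.
SPEED_OF_SOUND_SEAWATER = 1500.0

if __name__ == "__main__":
    # A hypothetical sonar ping whose echo returns after 0.4 seconds.
    depth = echo_distance(0.4, SPEED_OF_SOUND_SEAWATER)
    print(f"Seafloor depth: {depth:.0f} m")
```

With these assumed numbers the echo places the seabed about 300 m below the ship. Medical ultrasound scanners rely on the same principle, typically assuming an average speed of about 1540 m/s in soft tissue.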

Airport body scanners examine passengers with electromagnetic waves. The waves penetrate clothing but are reflected by the skin or by objects such as a penknife left in a trouser pocket.

The greater the difference between two materials, the stronger the reflection. For sound and seismic waves, the relevant property is the acoustic impedance: the product of a material’s density and the speed of the wave within it. Resource exploration makes use of this: waves are generated at the Earth’s surface and reflected by rock layers underground. One boundary that such seismic waves detect particularly well is the transition between rock and crude oil.
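How strong a reflection is can be estimated with the standard formula for a flat boundary hit head-on: the reflected fraction of the wave’s intensity is R = ((Z2 − Z1) / (Z2 + Z1))², where Z1 and Z2 are the acoustic impedances of the two materials. A minimal sketch; the function names are hypothetical and the densities and sound speeds below are rough textbook values chosen only for illustration:

```python
def acoustic_impedance(density_kg_m3: float, wave_speed_m_s: float) -> float:
    """Characteristic acoustic impedance Z = density * wave speed."""
    return density_kg_m3 * wave_speed_m_s


def reflected_fraction(z1: float, z2: float) -> float:
    """Fraction of intensity reflected at a flat boundary (normal incidence).

    R = ((Z2 - Z1) / (Z2 + Z1))**2 is zero when the two materials match
    and approaches 1 when their impedances differ greatly.
    """
    return ((z2 - z1) / (z2 + z1)) ** 2


if __name__ == "__main__":
    # Rough illustrative values (assumptions): density in kg/m^3, speed in m/s.
    water = acoustic_impedance(1000, 1480)
    soft_tissue = acoustic_impedance(1060, 1540)
    air = acoustic_impedance(1.2, 343)
    print(f"water -> soft tissue: {reflected_fraction(water, soft_tissue):.4f}")
    print(f"water -> air:         {reflected_fraction(water, air):.4f}")
```

With these values, only about 0.2 % of the intensity is reflected between water and soft tissue, which are similar materials, whereas more than 99 % is reflected between water and air, which differ enormously.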

Ground-penetrating radar allows us to map what lies underground in the same way. Its radio waves can be used, for example, to investigate archaeological sites. Ground-penetrating radar can also be used from the air, for example to measure the thickness of glacier ice. The data obtained help to document the effects of climate change.

Navigating With All-Round Perception

For air traffic control, it is important to know exactly where aircraft are. That is why radar systems scan the sky with radio waves. In autonomous driving, the car itself scans its surroundings, with lidar and radar as the main tools for navigation and distance measurement. Thanks to its short wavelengths (usually infrared laser light), lidar can scan objects very precisely and provides all-round vision within a radius of up to 200 metres. Radar, in contrast, uses longer, farther-reaching radio waves and is better suited to assessing movement. Because lidar also reacts to weather phenomena such as falling snowflakes, making autonomous vehicles work reliably in all weather remains difficult.
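Radar’s strength in assessing movement comes largely from the Doppler effect: an echo from an approaching object returns at a slightly higher frequency, and one from a receding object at a slightly lower frequency. A minimal sketch of the standard conversion from that frequency shift to the object’s speed towards or away from the sensor; the function name is hypothetical, and the 77 GHz carrier is an assumption (a band commonly used for automotive radar):

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radial_speed_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from the Doppler shift of its radar echo.

    The wave is shifted once on the way to the moving target and once on
    the way back, hence the factor 2: v = f_d * c / (2 * f0).
    Valid for speeds far below the speed of light. A positive result
    means the target is approaching (echo frequency raised).
    """
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2 * carrier_hz)


if __name__ == "__main__":
    # Hypothetical measurement: echo shifted by +10 kHz on a 77 GHz carrier.
    v = radial_speed_from_doppler(10_000, 77e9)
    print(f"Approaching at {v:.1f} m/s ({v * 3.6:.0f} km/h)")
```

In this hypothetical case, a shift of just 10 kHz on a 77 GHz signal corresponds to an object closing in at roughly 70 km/h, which is why even small frequency changes carry useful motion information.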

There are further challenges: How can the huge amounts of data be processed and interpreted fast enough? How can the car make the best decisions when there is not enough data? Who is liable when an accident occurs? And how can the car be protected from being hacked and controlled by others?

Seeing With Your Ears

Many animals use reflected sound waves for navigation (echolocation). Bats, for example, repeatedly emit short calls during their flight. These ultrasonic waves bounce off the objects in their environment. The returning echoes contain information about the objects, including direction, distance, size, shape, material and movement. This allows bats to detect obstacles and prey.

Daniel Kish has been blind since the age of one. He has learned to navigate with echolocation, much like a bat: he makes clicking sounds with his tongue that reach many metres farther than his white cane. Each click gives him a brief ‘look’ at his surroundings. Repeated clicks build up a detailed image in his head that even allows him to ride a bicycle. He also trains other visually impaired people, opening up new possibilities for them to shape their lives.

Research has shown that in expert echolocators, echoes are processed in the part of the brain normally responsible for vision. Although Daniel’s perception of his surroundings is blurrier and less detailed than eyesight would allow, he can still see in his own way.